28 Aug 2016

One of the reasons I use F# so much is that it’s an awesome scripting language to Get Stuff Done. Case in point: this blog. I recently decided to switch from BlogEngine.NET to Jekyll, which meant porting over nearly 9 years of blog posts (about 300), extracting HTML-formatted content from SQL and converting it to Markdown. After a couple of weeks of doing this by hand, I realized that at the current cadence, it would take me about a year to complete, and that by then I would probably have lost my mind out of boredom. Time for some automation with F# scripts!

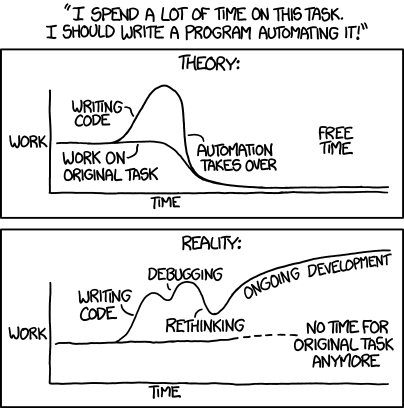

Source: xkcd

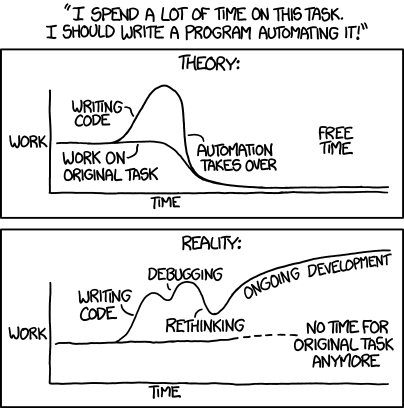

Source: xkcd

More...

14 Aug 2016

In our previous installment, we began exploring Gradient Boosting, and outlined how, by combining extremely crude regression models - stumps - we could iteratively create a decent prediction model for the quality of wine bottles, using a single Feature, one of the chemical measurements we have available.

In and of itself, this is an interesting result: the approach allows us to aggregate mediocre indicators together into a predictor that is better than its individual parts. However, so far, we are using only a tiny subset of the information available. Why restrict ourselves to a single Feature, and not use all of them? And, if the approach works with something as weak as a stump, perhaps we can do better, by aggregating less trivial prediction models?

This will be our goal today: we will create a Regression Tree, which we will in a future installment use in place of stumps in our Boosting procedure.

More...

06 Aug 2016

I have recently seen the term “gradient boosting” pop up quite a bit, and, as I had no idea what this was about, I got curious. Wikipedia describes Gradient Boosting as:

> a machine learning technique for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees.

The page contains both an outline of the algorithm and some references, so I figured, what better way to understand it than trying a simple implementation? In this post, I’ll start with a hugely simplified version, and will build up progressively over a couple of posts.

More...

24 Jul 2016

After quite some time on the road, I am finally back in San Francisco. My last stop on the way back was Portland, for the DotNetFringe conference. I’ll be totally honest: after 2 months away from home, I was a bit wiped out, and looking forward to some quiet time in my own place - not necessarily the best mindset heading to a conference. However, as it turns out, I ended up having a fantastic time there, and came back with a nice boost of energy.

More...

23 Apr 2016

I have been spending quite a bit of time lately with MBrace, a wonderful library that allows you to scale data processing or run heavy workloads on a cloud cluster, using simple F# scripts. The library is very nicely documented, and comes with a Starter Kit project that contains all you need to provision a cluster, together with many scripts illustrating various use cases.

This is great, but… if you just want to play with the library and get a sense for what it does, it might be a bit intimidating. Furthermore, not everyone has an Azure subscription ready, which creates a bit of friction. So I figured, let’s try to create the smallest possible project that would allow someone to try out MBrace, without any Azure subscription needed.

More...